Hallucinations—model-generated outputs that appear confident yet contain fabricated or incorrect information—remain one of the peskiest issues facing AI engineers today. As Retrieval- and Search-Augmented Systems have proliferated, systematically identifying and mitigating hallucinations is now critical.

I’ve been having a lot of conversations lately with engineers who assume two things: that hallucinations are mostly intrinsic (coming from the model’s training data), and that you need ground truth datasets to detect them. Both assumptions are wrong, and they’re making hallucination detection seem much more impractical than it actually is.

This primer outlines practical frameworks that work without ground truth data and focus on the extrinsic hallucinations that actually cause problems in production. Let’s dive in!

Understanding Extrinsic vs. Intrinsic Hallucinations

First off, let’s clarify the types of hallucinations we’re dealing with:

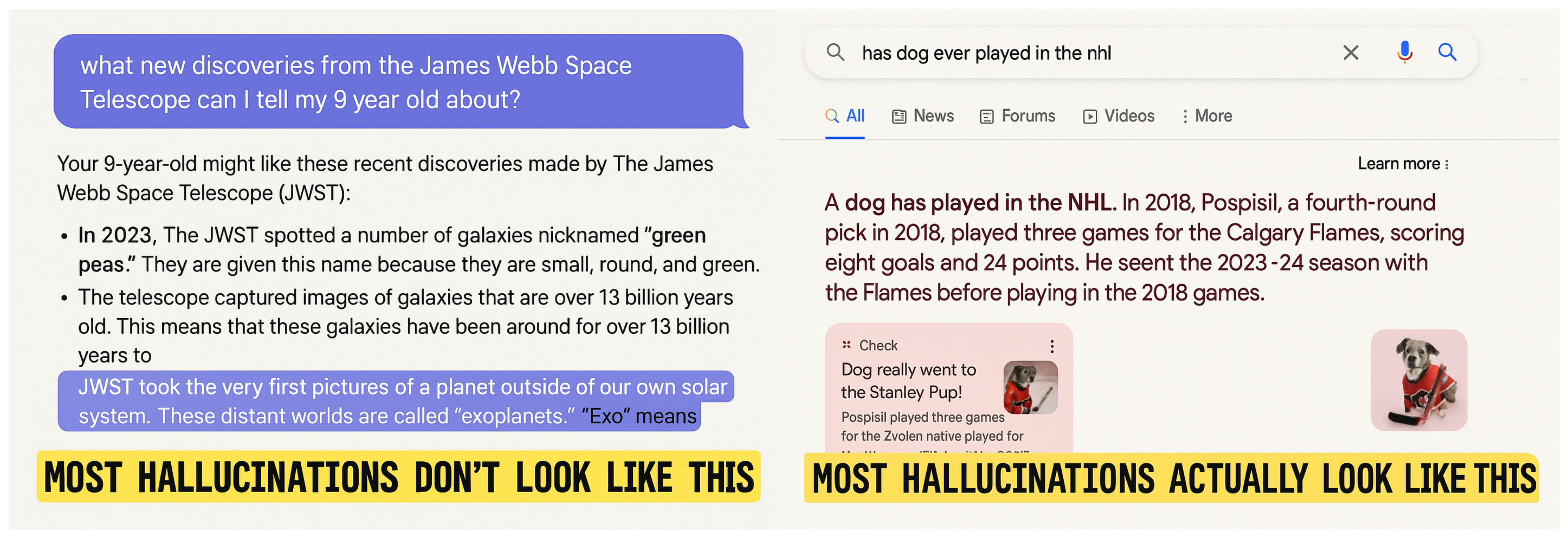

Intrinsic hallucinations are due to the model’s underlying training data and internal representation. Most commonly, these occur when the model is directly prompted without external context, resulting in plausible but unsupported claims that reflect inherent biases or imaginative extrapolations.

Extrinsic hallucinations occur when generated responses directly contradict, deviate, or extrapolate from data provided to the model. For instance, in a RAG implementation, a generated answer might introduce facts not supported by retrieved documents.

Most hallucinations in production environments are extrinsic, meaning they occur when the model is given external context. Despite common portrayals of AI as spontaneously fabricating information from thin air, the more common failure mode is a model misusing or misrepresenting the input it’s been given. These errors are especially common in products that let users supply their own data, since it’s harder for developers to design robust systems that can handle unpredictable, highly variable inputs.

A Practical Guide to Debugging Hallucinations

When things go wrong in a RAG or SAG pipeline, it’s not always obvious whether the issue lies with the search, the generation, or both. To debug hallucinations effectively, you need to break the problem into two parts:

1. Did the system retrieve the right information, and

2. Did the model use the information correctly?

You don’t need a ground-truth answer to do this, you just need a way to reason through where the failure occurred.

Most people focus on evaluating the generation: did the model produce a factually accurate and relevant answer? That’s important, but only half the picture. You also need to assess whether the model had access to the right context in the first place.

Here’s how to systematically implement this two-stage approach:

Step 1: Evaluate the Search or Retrieval

First, answer this question: Did the retrieved documents contain the necessary information to answer the query?

The challenge here is, of course, knowing whether you have all the information to answer a query, and what it means to “fully address” it. This will impact how you would set up the evaluation (e.g. judge model) for this step.

- If yes, that means the search/retrival happened correctly. Now move to evaluating the generation.

- 💡 If you are looking to still optiomize this step, you may want to look at context relevance (% documents or chunks that contained information that was relevant to answering the query) and context usage (% documents or chinks that were used in the generation). For optimal retrieval, you want both metrics to be high.

- If no, determine why:

- Bad retrieval: relevant documents exist in the corpus (e.g. vector database), but the system failed to surface them.

- Bad data: the corpus contains wrong, incomplete, or is completely missing the correct or necessary information to answer the query.

Step 2: Evaluate the Generation

Next, ask the key question: Does the answer contain information that is not found in the retrieved documents?

This is a critical check because you can evaluate it objectively by comparing the generated response against the provided context.

- If no, the answer is fully supported by the retrieved documents.

- If yes, the answer contains unsupported information and you’ve identified a hallucination: the model fabricated details not present in the context.

When you identify a hallucination, you can dive deepeer and determine what caused it:

- Bad generation (due to context usage): the model failed to identify the useful part of the context for answering the question. This can be due to:

- Poor context quality (e.g. context too long or poorly written for the model to understand)

- User query interpretation (e.g. user query was misinterpreted by model; this happens a lot when user queries are phrased poorly or in a confusing manner)

- Partial context usage (e.g. model used only part of the context or provided an incomplete answer to the user query)

Relevant docs exist but were not surfaced] E --> G[⚠️ Bad Data

Corpus is wrong, incomplete, or missing info] C --> B2{🔍 Does the answer contain info

not found in the context?} F --> B2 G --> B2 B2 -->|No| K[✅ Good Generation

Answer is fully supported

by retrieved documents] B2 -->|Yes| L[⚠️ Hallucination

Model fabricated

details not in the context] L --> H2{🔍 Why?} H2 --> H3[⚠️ Poor context quality:

too long or hard to understand] H2 --> H5[⚠️ Partial context usage:

only some relevant info used] H2 --> H4[⚠️ Misinterpreted query:

unclear or confusing query]

Proactively Auditing Your Corpus: Does It Actually Contain the Answers?

The “bad data” classification above raises a critical question: how do you know if your corpus is missing key information? Can you discover gaps before users start hitting them in production?

This is where a proactive corpus audit becomes valuable, especially during system onboarding or when integrating new document collections.

What You Need:

- Your complete document set that you are using for search or retrieval (e.g. the contents of the vector database accessible to a particular user)

- Common user queries with ground truth answers

Process: The analysis involves performing a brute force, exhaustive search on your database to identify whether answers to the common user queries actually exist in your corpus.

❗ Anecdotally, we actually discover that a significant part of issues with RAG systems are due to bad data, and therefore will not be resolved with improvements to the retrieval or generation. Unfortunately, garbage in - garbage out still stands.

‼️ This only works for focused corpora. Large-scale systems (think extensive databases, web search) make brute-force validation impractical. For those cases, rely on a strong user data onboarding processes, as well as user feedback loops to catch gaps over time.

Remember: You Don’t Need Ground Truth

The most important takeaway from this framework is that you can identify the majority of hallucinations without knowing the “correct” answer. By simply checking whether the generated response is supported by the retrieved documents, you catch extrinsic hallucinations, the most common type in production RAG systems.

This makes hallucination detection practical and scalable. You don’t need expensive human annotation or comprehensive ground truth datasets. You just need to systematically compare what the model generated against what it was given as context.

Ground truth becomes useful for deeper analysis (distinguishing between bad generation vs. bad data), but it’s not required for the core task of identifying when models fabricate information not present in their context.

Conclusion

As AI systems become more sophisticated and handle increasingly complex tasks, the temptation is to build equally complex evaluation systems. Don’t. The most effective approaches to hallucination detection are often the simplest: systematic frameworks that break down the problem into manageable pieces that you can automate away.

The two-stage evaluation method we’ve outlined —checking context support first, then evaluating retrieval quality— gives you a practical foundation for building reliable AI applications. Combined with proactive corpus auditing where feasible, these approaches help ensure your systems fail gracefully and improve systematically.

The goal isn’t perfect hallucination detection. It’s building systems that are transparent about their limitations, provide appropriate uncertainty signals, and continuously improve based on objective feedback.

Want to dive deeper into hallucination detection or discuss your specific use case? We’d love to hear from you! Shoot an email at julia@quotientai.co.

P.S. We’re building the next generation of AI monitoring that packages frameworks like these into specialized detectors: they monitor your systems and catch issues like hallucinations automatically. The platform is free to get started, self-serve, and integrates with just a few lines of code. We’re continuously improving based on feedback from teams using it in production, so if you’re tired of manually checking for hallucinations, feel free to check us out.