These systems are now used to parse SEC filings and earnings reports, extract and summarize risk factors, analyze client portfolios, flag potential compliance issues, and even generate draft reports for advisors and regulators. Some are embedded in client-facing experiences, where they answer questions about accounts, balances, and investment performance in real time. The outputs of these systems directly influence investment decisions, regulatory filings, and client communications. In this environment, the margin for error is effectively zero.

Even a single hallucination can cause outsized damage. If an agent fabricates a risk disclosure, misstates a client’s exposure, or attributes the wrong data to a compliance report, the consequences ripple outward: regulatory violations, loss of trust, and reputational harm.

The deeper problem is that AI systems often look confident, even when they are wrong. A portfolio insight or compliance summary may sound authoritative, but was it actually grounded in the right filings, transactions, or client records? In finance, that distinction is the difference between a reliable assistant and a liability.

Building Reliability into AI Financial Agents

The key to solving this is not just better prompts or larger models. What matters is visibility into how the agent arrives at its answers. AI agents generate traces that record the documents they retrieve, the steps they take, and the reasoning they follow. Buried inside those traces is the truth about whether the output was correct, compliant, and supported by evidence.

The challenge is that traces are massive and complex. Manually reviewing them is slow, expensive, and impossible to scale.

Quotient addresses this by ingesting traces directly from your AI agents and automatically analyzing them with expert detectors designed for financial contexts.

These detectors identify critical reliability issues such as:

- Hallucinations: when the model generates information not supported by filings, client records, or transaction data

- Attribution errors: when a claim is linked to the wrong section or dataset

- Irrelevant retrievals: when the agent pulls in the wrong context and bases its reasoning on it

Instead of relying on manual review or guesswork, Quotient surfaces these problems in real time. This makes it possible to build financial AI agents that are both powerful and reliable, capable of withstanding business and regulatory scrutiny.
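To make the idea of a grounding check concrete, here is a deliberately naive sketch. It is not how Quotient's detectors work; it only illustrates the underlying question of whether each figure in an answer appears in the retrieved context. The function names and the sample strings are hypothetical.

```python
import re

# Matches integers and decimals, with optional thousands separators.
NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")

def _numbers(text: str) -> set[str]:
    """Return numeric tokens found in text, with commas removed."""
    return {m.replace(",", "") for m in NUMBER.findall(text)}

def unsupported_numbers(answer: str, context: str) -> set[str]:
    """Numbers that appear in the answer but nowhere in the context."""
    return _numbers(answer) - _numbers(context)

# Hypothetical tool output and model answer, for illustration only.
context = "AAPL snapshot\nPrice: 189.50\nP/E: 29.4"
answer = "AAPL trades at 189.50 with a P/E of 31.2."

print(unsupported_numbers(answer, context))  # {'31.2'}
```

A string-matching check like this misses paraphrases, rounding (189.5 versus 189.50), and non-numeric claims, which is part of why purpose-built detectors are needed.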

In this post, we show you how to build a production-ready financial analyst AI agent with built-in Quotient tracing and monitoring. The code for the agent is open source and available in the Quotient Cookbooks repo.

Prerequisites

You’ll need:

- Python 3.10 or newer

- API keys for OpenAI and Quotient AI

Install required packages:

pip install quotientai openai langchain langchain-openai langgraph yfinance opentelemetry-api python-dotenv openinference-instrumentation-langchain

Set credentials in a .env file:

OPENAI_API_KEY=your-openai-key

QUOTIENT_API_KEY=your-quotient-key
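A missing key usually surfaces later as a confusing authentication error, so it can help to fail early. The sketch below uses only the standard library; the helper name `missing_keys` is hypothetical. In the real application you would call it with no arguments after `load_dotenv()` has populated the environment.

```python
import os
from typing import Mapping

REQUIRED_KEYS = ("OPENAI_API_KEY", "QUOTIENT_API_KEY")

def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variables that are absent or blank in env."""
    return [k for k in REQUIRED_KEYS if not env.get(k, "").strip()]

# Demonstration with an explicit mapping instead of the real environment.
demo_env = {"OPENAI_API_KEY": "your-openai-key", "QUOTIENT_API_KEY": ""}
print(missing_keys(demo_env))  # ['QUOTIENT_API_KEY']
```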

Note: yfinance data is best effort and may be delayed or incomplete. Treat it as demo data for this tutorial.

Building the Financial Analyst Agent

We will build a small agent that can:

(1) fetch stock data, (2) compute simple returns, and (3) pull recent headlines.

We will instrument it with Quotient Traces to capture retrieval, tool usage, and reasoning, and to automatically detect hallucinations and document relevancy issues.

Define Tools

These tools keep behavior explicit and auditable. The agent should not make claims that the tools cannot support.

# Saved as tools.py; the agent module imports these functions.
import yfinance as yf

def get_stock_data(ticker: str) -> str:
    """Return a snapshot of price, P/E, market cap, and 52-week range."""
    t = yf.Ticker(ticker.upper())
    # .info may be missing or empty for unknown tickers, so fall back to {}
    info = getattr(t, "info", {}) or {}
    price = info.get("currentPrice", "N/A")
    pe = info.get("trailingPE", "N/A")
    mc = info.get("marketCap", "N/A")
    hi = info.get("fiftyTwoWeekHigh", "N/A")
    lo = info.get("fiftyTwoWeekLow", "N/A")
    return (
        f"{ticker.upper()} snapshot\n"
        f"Price: {price}\n"
        f"P/E: {pe}\n"
        f"Market Cap: {mc}\n"
        f"52W High: {hi}\n"
        f"52W Low: {lo}"
    )

def calculate_returns(ticker: str, period: str = "1mo") -> str:
    """Compute simple percent return using yfinance history."""
    t = yf.Ticker(ticker.upper())
    hist = t.history(period=period)
    # An unknown ticker or unsupported period yields an empty DataFrame;
    # report that instead of raising IndexError on .iloc[0]
    if hist.empty:
        return f"No price history for {ticker.upper()} over {period}"
    start = float(hist["Close"].iloc[0])
    end = float(hist["Close"].iloc[-1])
    pct = ((end - start) / start) * 100
    return f"{ticker.upper()} {period} return: {pct:+.2f}% ({start:.2f} → {end:.2f})"

def get_company_news(ticker: str) -> str:
    """Return a few recent headlines if available."""
    t = yf.Ticker(ticker.upper())
    news = getattr(t, "news", []) or []
    items = news[:3]
    if not items:
        return f"No recent headlines for {ticker.upper()}"
    lines = [f"• {n.get('title','Untitled')} ({n.get('publisher','Unknown')})" for n in items]
    return f"Latest for {ticker.upper()}:\n" + "\n".join(lines)
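The return arithmetic inside calculate_returns can be checked without any network call. The sketch below pulls the same formula into two hypothetical helpers, `simple_return_pct` and `format_return`, and runs them on made-up prices.

```python
def simple_return_pct(start: float, end: float) -> float:
    """Percent change from start to end: (end - start) / start * 100."""
    if start == 0:
        raise ValueError("start price must be non-zero")
    return (end - start) / start * 100

def format_return(ticker: str, period: str, start: float, end: float) -> str:
    """Format the result the same way the calculate_returns tool does."""
    pct = simple_return_pct(start, end)
    return f"{ticker.upper()} {period} return: {pct:+.2f}% ({start:.2f} → {end:.2f})"

# Made-up prices: a move from 200 to 210 is a 5% gain.
print(format_return("aapl", "1mo", 200.0, 210.0))
# AAPL 1mo return: +5.00% (200.00 → 210.00)
```

Keeping the arithmetic in a pure function like this makes it easy to unit test separately from the data fetch.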

Initialize Quotient Traces

We turn on tracing and enable detectors. This ensures that every agent run produces a trace with spans, tool calls, and detection results.

from quotientai import QuotientAI, DetectionType
from openinference.instrumentation.langchain import LangChainInstrumentor

quotient = QuotientAI()

quotient.tracer.init(
    app_name="financial-analyst",
    environment="production",
    instruments=[LangChainInstrumentor()],
    detections=[
        DetectionType.HALLUCINATION,
        DetectionType.DOCUMENT_RELEVANCY
    ]
)

Build and Instrument the Agent

We use LangGraph’s ReAct-style agent, equipped with our financial tools and a system prompt that instructs the model to ground every answer in tool output. On top of that, we wrap the query function with Quotient Traces, so every execution is automatically recorded with spans, tool calls, and detection results.

from datetime import datetime, timezone
from typing import Dict, Any

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent
from langchain_core.messages import SystemMessage
from langchain_core.tools import tool
from opentelemetry.trace import get_current_span
from quotientai.tracing import start_span

from tools import get_stock_data, calculate_returns, get_company_news

load_dotenv()

# Wrap Python functions as LangChain tools
@tool
def tool_get_stock_data(ticker: str) -> str:
    """Fetch a stock snapshot including price, P/E, and market cap"""
    return get_stock_data(ticker)

@tool
def tool_calculate_returns(ticker: str, period: str = "1mo") -> str:
    """Calculate returns over a period: 1d, 5d, 1mo, 3mo, 1y"""
    return calculate_returns(ticker, period)

@tool
def tool_get_company_news(ticker: str) -> str:
    """Get recent news headlines for a company"""
    return get_company_news(ticker)

SYSTEM_PROMPT = SystemMessage(content="""
You are a financial analyst assistant.
Use the provided tools to ground every claim in tool output.
If a user asks for data the tools cannot provide, say it is unavailable.
Do not infer or fabricate ESG scores, emissions, or other unsupported facts.
""")

# `quotient` is the QuotientAI client initialized in the previous step
@quotient.trace("analyze-financial-query")
async def analyze_financial_query(query: str) -> Dict[str, Any]:
    # Low temperature reduces run-to-run variation (it does not guarantee determinism)
    model = ChatOpenAI(model="gpt-4o-mini", temperature=0.1)

    # Create the agent with our tools
    agent = create_react_agent(
        model=model,
        tools=[tool_get_stock_data, tool_calculate_returns, tool_get_company_news]
    )

    # Span: query preparation context
    with start_span("query-preparation"):
        span = get_current_span()
        span.set_attribute("user.query", query)
        # datetime.utcnow() is deprecated; use a timezone-aware UTC timestamp
        span.set_attribute("query.ts_utc", datetime.now(timezone.utc).isoformat())

    # Span: agent execution
    with start_span("agent-execution"):
        result = await agent.ainvoke({
            "messages": [SYSTEM_PROMPT, {"role": "user", "content": query}]
        })

        # Extract final message content
        content = result["messages"][-1].content if isinstance(result, dict) else str(result)

        # Attach response diagnostics to the current span
        span = get_current_span()
        span.set_attribute("response.len", len(content))
        span.set_attribute("response.preview", content[:160])

    return {
        "query": query,
        "response": content
    }

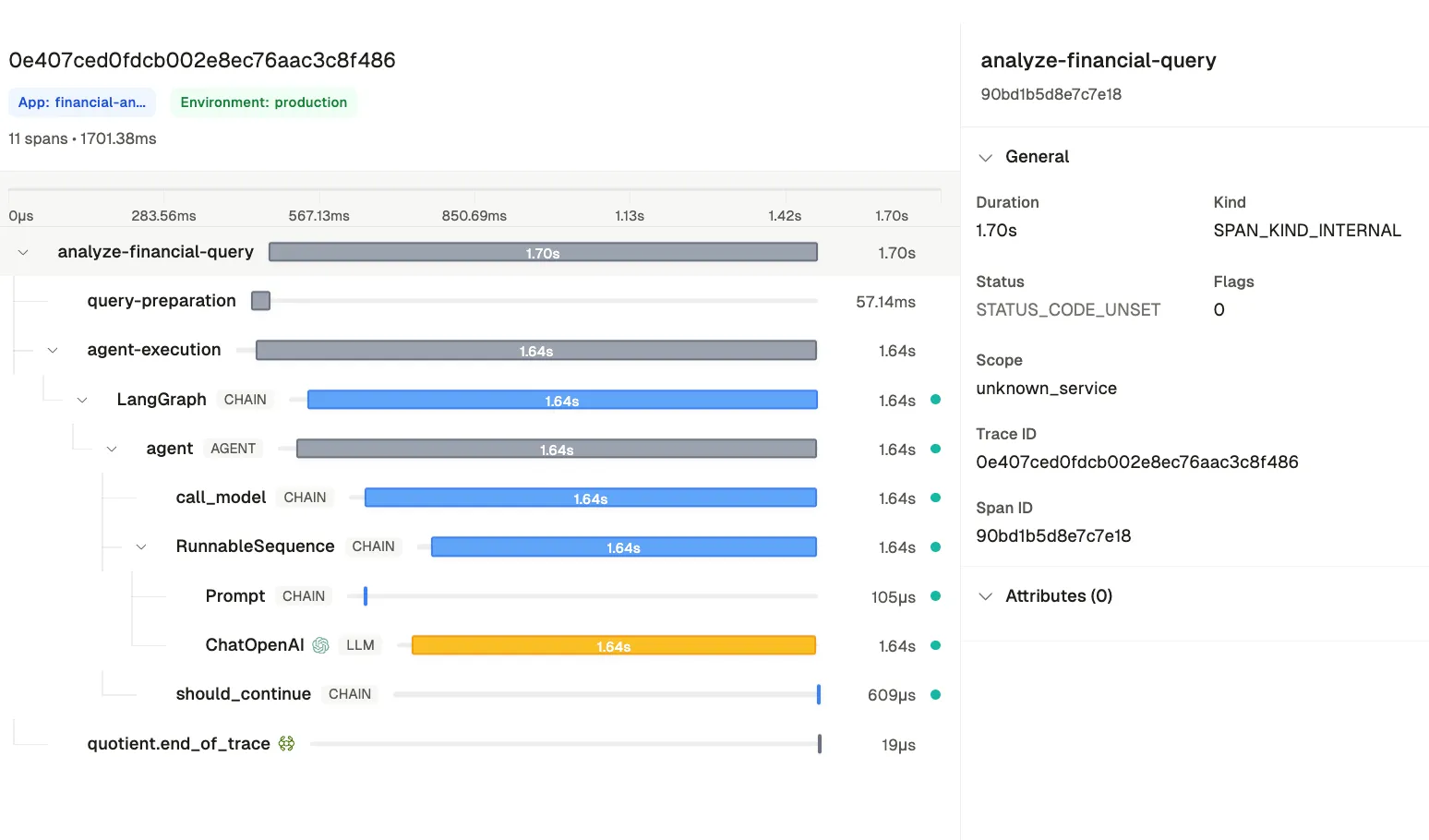

With the @quotient.trace decorator, the full context is captured automatically. For each query, you get:

- A trace ID to retrieve the execution later,

- Spans showing each step of the process,

- Tool calls and their outputs, and

- Detection results for hallucinations or irrelevant retrievals.

For example, when we ask:

"What’s Tesla’s ESG score and sustainability initiatives?"

None of the agent’s three tools returns ESG data, so the only grounded answer is that the information is unavailable. Without tracing, there’s zero visibility: the agent might confidently fabricate an answer and all you’d see in logs is the final output. Even with tracing, the raw data is so complex that it’s nearly impossible to parse, even for a simple agent. Quotient ingests those traces, automatically analyzes them, and flags the critical issues in the agent’s run so you know exactly when and why something went wrong.
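Because the traced function is a coroutine, it has to be driven by an event loop. The sketch below shows one way to run several queries concurrently with asyncio. So that it runs offline, it uses a hypothetical stub in place of the real analyze_financial_query; in the tutorial code you would import the traced function instead. The `run_batch` helper and the canned response text are also assumptions.

```python
import asyncio
from typing import Any, Dict

async def analyze_financial_query(query: str) -> Dict[str, Any]:
    """Offline stand-in for the traced agent function (hypothetical stub)."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return {"query": query, "response": "That data is unavailable from my tools."}

async def run_batch(queries: list[str]) -> list[Dict[str, Any]]:
    """Run the queries concurrently; results come back in input order."""
    return await asyncio.gather(*(analyze_financial_query(q) for q in queries))

queries = [
    "What's Tesla's ESG score and sustainability initiatives?",
    "What was AAPL's return over the last month?",
]
results = asyncio.run(run_batch(queries))
for r in results:
    print(f"{r['query']} -> {r['response']}")
```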

Analyze Traces

Once you have the trace ID, you can view the trace in the Quotient Dashboard or fetch it back into your workflow to examine its spans, tool calls, and detections:

def inspect(trace_id: str):
    trace = quotient.traces.get(trace_id=trace_id)
    for s in trace.spans:
        print(f"- {s.name}: {s.duration_ms}ms")
    print("Tool calls:", trace.tool_calls)
    print("Detections:", trace.detections)

# Example:
# inspect("8fc5f7d4d1a24c0f8d3e1a9b2c0e1234")

A typical output might look like:

analyze-financial-query: 2150ms
  query-preparation: 12ms
  agent-execution: 2100ms
    get_stock_data: 145ms
    calculate_returns: 98ms
Detections:
  hallucination: True
  document_relevancy: 0.9

Instead of guessing where things went wrong, you can immediately see that the issue was not retrieval: the document relevancy score is high (0.9), yet the hallucination detector fired. The model generated unsupported claims despite relevant context.
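The same reasoning can be turned into a small triage rule. The sketch below assumes the detection results are available as a plain mapping with the two keys from the sample output. That shape, the `triage` name, and the 0.5 relevancy threshold are all assumptions made for illustration, not part of the Quotient API.

```python
from typing import Mapping, Union

def triage(detections: Mapping[str, Union[bool, float]],
           relevancy_floor: float = 0.5) -> str:
    """Classify a run by where the failure most likely originated."""
    hallucinated = bool(detections["hallucination"])
    relevant = float(detections["document_relevancy"]) >= relevancy_floor
    if hallucinated and relevant:
        return "generation"    # good context, unsupported claims anyway
    if hallucinated:
        return "retrieval"     # weak context likely led the model astray
    if not relevant:
        return "weak-context"  # no hallucination flagged, but retrieval is off
    return "ok"

# Values from the sample output above.
print(triage({"hallucination": True, "document_relevancy": 0.9}))  # generation
```

A "generation" result points toward prompt or model changes, while a "retrieval" result suggests fixing what the tools return first.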

In the dashboard, the traces will look like this:

Conclusion

In financial services, accuracy is not optional. Every answer an AI agent produces must be grounded in the right data and able to withstand external scrutiny. Without that level of reliability, even the most sophisticated system becomes a liability.

Quotient makes AI reliability achievable. By ingesting agent traces and applying specialized detectors, it automatically surfaces hallucinations, attribution errors, and other critical issues. Teams gain the visibility to debug systematically rather than through trial and error, turning AI oversight into a disciplined, repeatable process.

The result is financial AI agents that can be trusted: delivering accurate insights, meeting compliance obligations, and reinforcing confidence with clients, regulators, and stakeholders. With Quotient, firms move beyond experimentation and put AI to work responsibly at the core of their business.